PCIe NVMe storage can provide an incredible speed boost to any server, but booting from it is not natively supported on 11th generation Dell PowerEdge servers.

11th generation servers are very popular amongst the home lab community and could benefit from a fast boot device.

12th generation servers such as the R720 support booting from NVMe devices once the latest firmware updates have been applied. If you have a 12th generation server, do not follow this guide; simply update the firmware on your machine.

This procedure should work on any Dell PowerEdge server that can boot from a USB device.

Booting from NVMe storage is simple to do. In this post I explain how it’s done and show benchmarks from a Dell PowerEdge R310.

Hardware you will need:

- Two USB Flash drives:

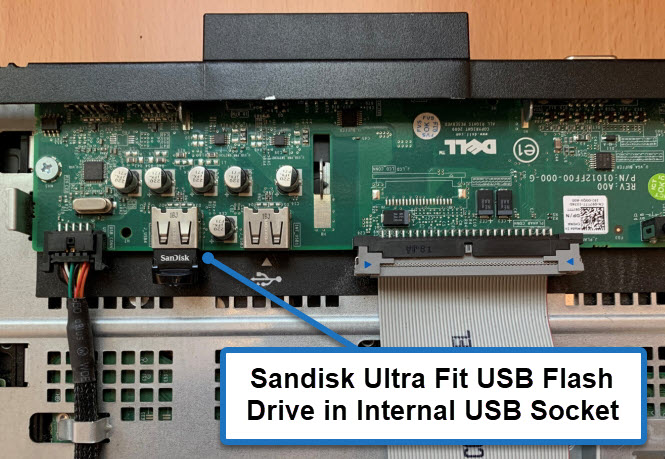

- One to run clover bootloader. I used this tiny Sandisk Ultra Fit Flash Drive.

- One for your bootable Windows ISO.

- A PCI NVMe Adapter and a NVMe Drive:

- I used this cheap NVMe to PCIe adapter from Amazon.

- With a Samsung 970 Evo Plus also from Amazon

I also tested the process on a 1.2 TB Intel DC P3520 PCIe card, which also worked fine.

Software you will need:

- A Windows Server installation ISO
- Rufus, to create the bootable Windows installation USB stick
- Boot Disk Utility, to create the Clover USB stick

PCIe NVMe Boot Process

When this procedure is complete, the PowerEdge server will boot from the internal USB storage and run the Clover EFI bootloader. Clover loads the NVMe driver and then boots the installed operating system from the NVMe drive.

If your server has internal SD card storage, you could boot from that instead.

Install the NVMe Adapter and Drive

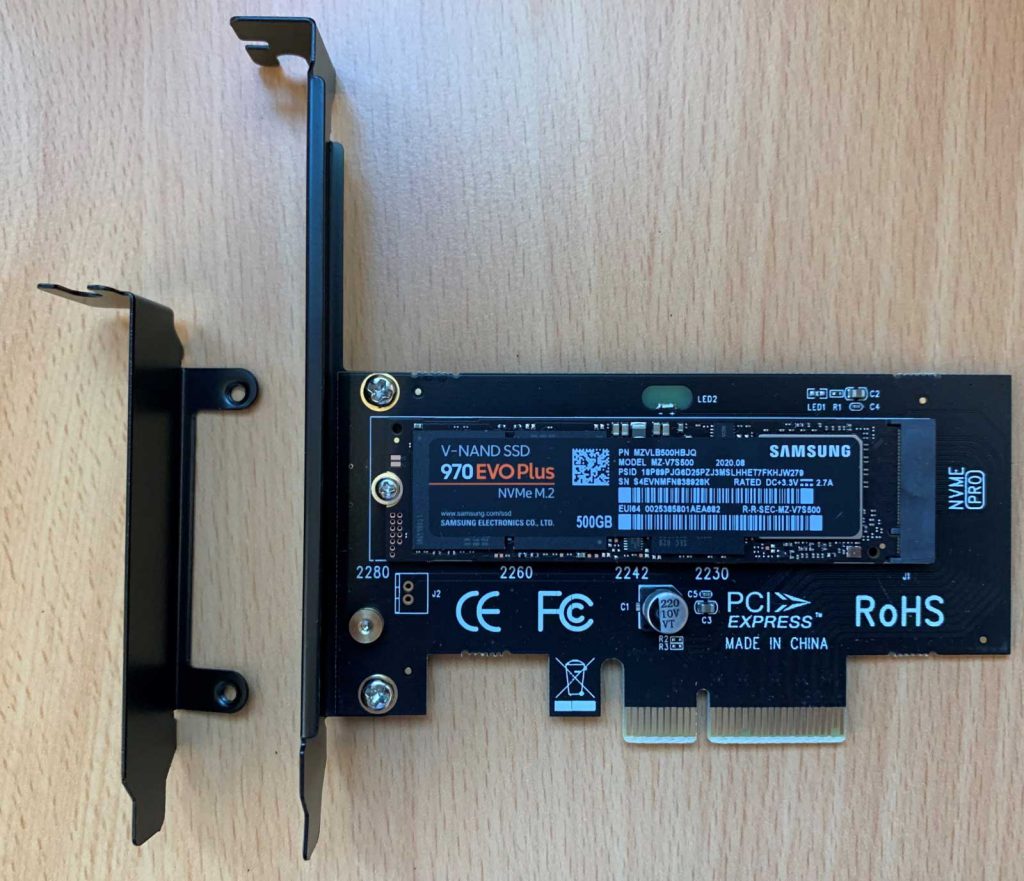

First install the NVMe adapter and drive into your Dell PowerEdge server. I used this cheap adapter from Amazon and a 500 GB Samsung 970 Evo Plus.

Here is the unit before I installed it in the server, without the heatsink fitted. It comes with both regular and low-profile PCIe brackets:

And here is the unit installed in the PowerEdge R310 with the heatsink and thermal pad applied:

Create your bootable Windows Server Installation

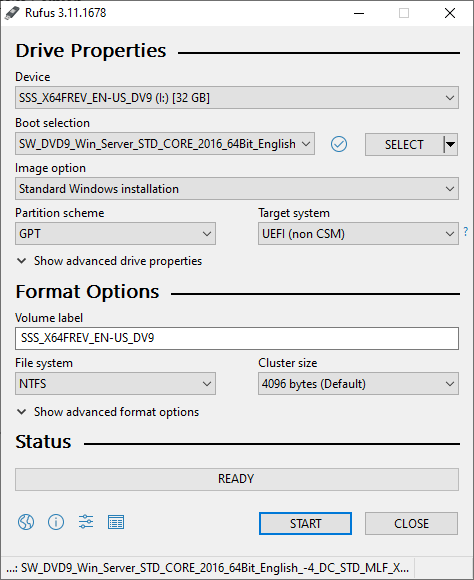

The first step is to create your Windows Server installation USB stick. There are lots of guides on how to do this, but here is how I did it.

- Download and install Rufus.
- Point Rufus to your Windows Server ISO.
- Configure Rufus with the following options:
  - Partition scheme: GPT
  - Target system: UEFI (non CSM)

Install Windows in the normal way

Boot the server from the Windows USB stick and run Setup as usual. Windows Server 2012 R2 and newer include Microsoft’s NVMe driver, so Setup will see the NVMe drive and offer to install to it.

When Windows Setup is complete, it will reboot. The server will then be unable to boot Windows because the Dell UEFI firmware has no NVMe support. But don’t worry about that!

Set Up the Clover EFI USB Boot Stick

Now set up the Clover USB boot stick or SD card.

- Download and run Boot Disk Utility.
- Insert the USB stick that you are going to boot from into your PC.
- Select your USB stick and click Format:
- Open your newly formatted drive and copy \EFI\CLOVER\drivers\off\NvmExpressDxe.efi to:
  - \EFI\CLOVER\drivers\BIOS
  - \EFI\CLOVER\drivers\UEFI

Copying NvmExpressDxe.efi into the driver folders adds NVMe support to Clover, which enables it to boot the Windows installation you have just completed.
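If you would rather script the copy step, here is a minimal Python sketch of it. It assumes the stick is already mounted and that Boot Disk Utility produced the \EFI\CLOVER\drivers\off layout described above; the function name install_nvme_driver is hypothetical and not part of Boot Disk Utility or Clover.

```python
import shutil
from pathlib import Path


def install_nvme_driver(usb_root: Path) -> list[Path]:
    """Copy Clover's NvmExpressDxe.efi from drivers/off into drivers/BIOS and drivers/UEFI.

    usb_root is wherever the Clover USB stick is mounted (an assumption, e.g. Path("E:/")).
    Returns the destination paths that were written.
    """
    drivers = usb_root / "EFI" / "CLOVER" / "drivers"
    source = drivers / "off" / "NvmExpressDxe.efi"
    if not source.is_file():
        # The expected Boot Disk Utility layout is missing; stop rather than guess.
        raise FileNotFoundError(f"NVMe driver not found at {source}")

    copied = []
    for folder in ("BIOS", "UEFI"):
        target_dir = drivers / folder
        target_dir.mkdir(parents=True, exist_ok=True)  # create the folder if it is missing
        destination = target_dir / source.name
        shutil.copy2(source, destination)  # copies file contents and timestamps
        copied.append(destination)
    return copied
```

For example, with the stick mounted as a hypothetical drive E:, call `install_nvme_driver(Path("E:/"))`. Raising an error when the source file is missing is deliberate: a stick without it was probably not formatted by Boot Disk Utility.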

My \EFI\CLOVER\drivers\UEFI looks like this:

Insert the Clover USB Flash Drive or SD Card into your server

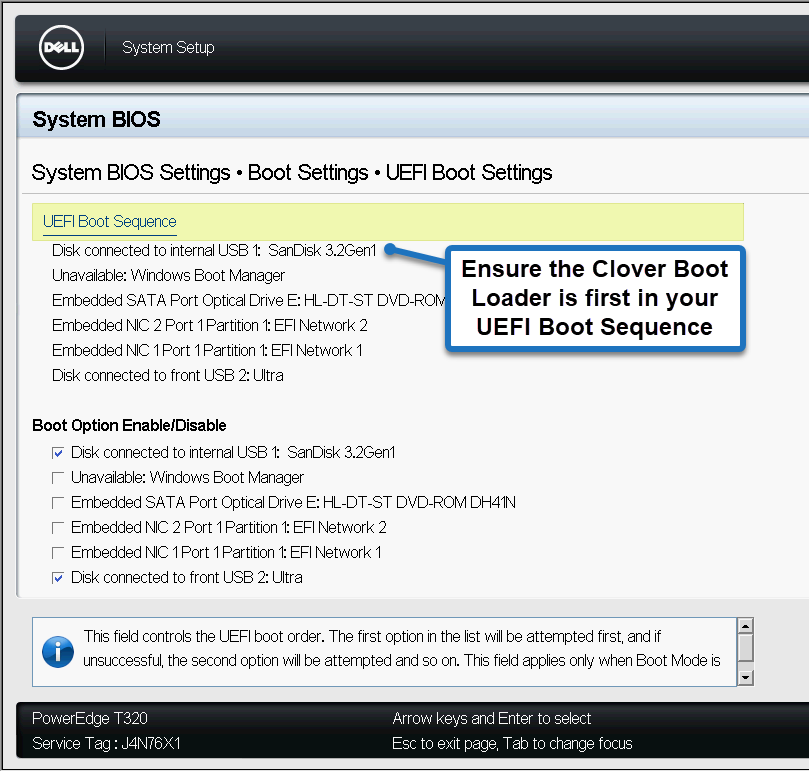

Next, simply insert the USB flash drive or SD card into your server and make sure the UEFI boot order is set to boot from your Clover USB stick or SD card:

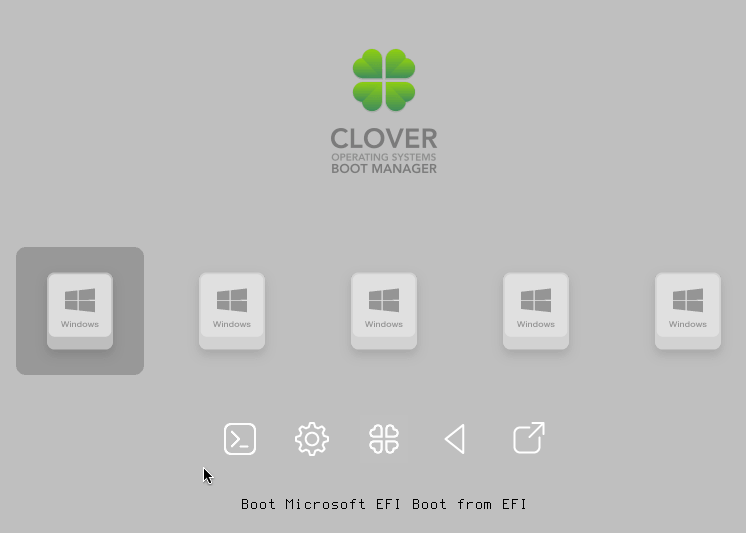

When booting from the internal Clover USB stick, the server will briefly display the Clover boot screen:

The Clover defaults worked right away for me, and I didn’t have to configure anything.

You can modify the config.plist file (which is in the root of the USB stick) to reduce the timeout if you want to speed things up a little:

Modify the “integer” value on line 36 to reduce the boot delay.
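Line numbers can shift between Clover versions, so it may be safer to change the value by key. This Python sketch uses the standard-library plistlib module and assumes the boot delay is stored under Boot → Timeout, which is where Clover’s stock config.plist keeps it; check your own file first. The function name set_clover_timeout is hypothetical.

```python
import plistlib
from pathlib import Path
from typing import Optional


def set_clover_timeout(config_path: Path, seconds: int) -> Optional[int]:
    """Set Boot -> Timeout in a Clover config.plist; return the previous value (None if absent)."""
    with config_path.open("rb") as f:
        config = plistlib.load(f)

    # Assumption: Clover reads its boot-menu delay from the "Timeout" key inside "Boot".
    boot = config.setdefault("Boot", {})
    previous = boot.get("Timeout")
    boot["Timeout"] = seconds  # plistlib writes a Python int back as an <integer> element

    with config_path.open("wb") as f:
        # Rewrites the whole file: key order may change and XML comments are not preserved.
        plistlib.dump(config, f)
    return previous
```

Because the file is rewritten from scratch, keep a copy of the original config.plist before running it.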

Windows should now proceed to boot normally directly from the NVMe drive.

Performance Results

I was really impressed with the performance improvement when booting from the NVMe drive. For clarity, the configuration of this system is:

- Dell PowerEdge R310
- Intel Xeon X3470 2.93 GHz
- 16 GB RAM
- Dell PERC H700 (512 MB cache)

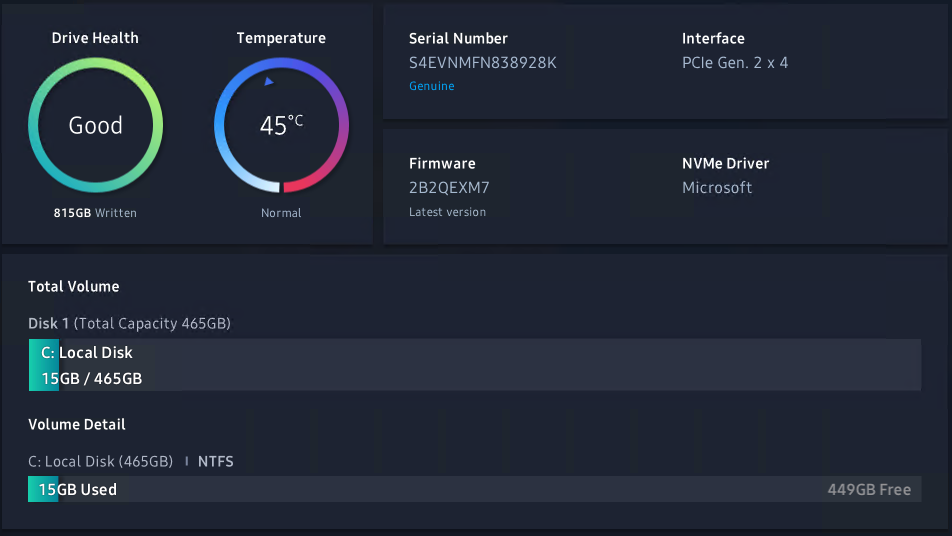

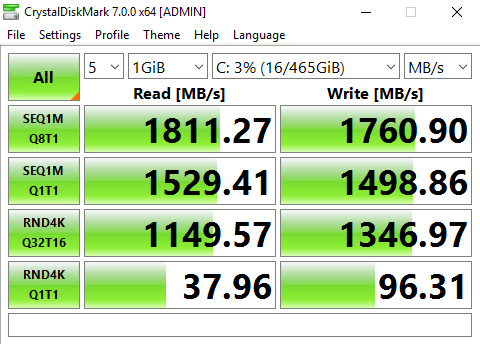

Performance of the Samsung 970 Evo Plus NVMe drive is excellent, but it is constrained in the R310 because the slot is only PCIe Gen 2 x4.
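A quick back-of-the-envelope calculation shows why. PCIe 2.0 signals at 5 GT/s per lane and uses 8b/10b encoding, so the usable bandwidth of an x4 slot is roughly:

$$
\begin{aligned}
\text{per lane} &= 5\ \text{GT/s} \times \tfrac{8}{10} = 4\ \text{Gb/s} = 500\ \text{MB/s} \\
\text{x4 link} &= 4 \times 500\ \text{MB/s} = 2{,}000\ \text{MB/s}
\end{aligned}
$$

That is a theoretical ceiling before protocol overhead, and it is well below the 3,500 MB/s sequential read that Samsung rates the 970 Evo Plus for, since the drive is designed for a PCIe 3.0 x4 link.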

Disabling C-states in the BIOS improves performance slightly.

Here are the CrystalDiskMark results from the R310 with C-states disabled:

Here are all the results from both machines, with and without C-states enabled.

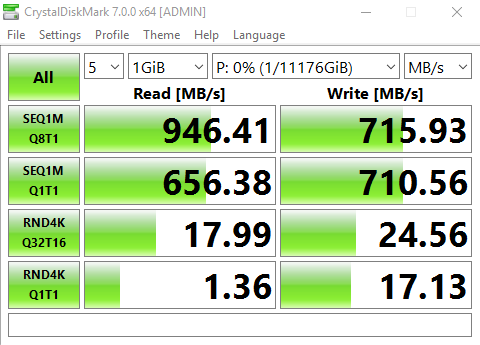

As a crude comparison, here is the performance of a RAID 0 array in the R310 made up of four 7,200 RPM SATA drives:

This R310 also has a Samsung 860 EVO SSD in the DVD drive bay, connected to a SATA 2 port on the motherboard:

You can see the drive’s sequential performance being constrained by the SATA 2 port, but it still gives good random performance.
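The SATA 2 limit is easy to estimate. The link runs at 3 Gb/s with 8b/10b encoding, while small random reads move far less data per second. The 10,000 IOPS figure below is a hypothetical example, not a measured result:

$$
\begin{aligned}
\text{SATA 2 ceiling} &= 3\ \text{Gb/s} \times \tfrac{8}{10} = 2.4\ \text{Gb/s} = 300\ \text{MB/s} \\
\text{4 KiB random at 10{,}000 IOPS} &= 10{,}000 \times 4{,}096\ \text{B} \approx 41\ \text{MB/s}
\end{aligned}
$$

The 860 EVO is rated for up to 550 MB/s sequential read on a 6 Gb/s SATA port, so sequential transfers hit the 300 MB/s ceiling, while small random transfers stay well under it and are limited by the drive’s latency rather than the link.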

If you are using VMware and booting it from a different storage device such as an SD card or USB stick, you can simply access the NVMe drive in the normal way.

Conclusion – is it worth adding NVMe storage to an old Dell PowerEdge?

Given the low cost of both the adapter and Samsung SSD and the huge resulting performance boost, it is certainly worth experimenting.

I can’t say yet whether I would use this setup in production, but so far it seems to work fine. Here is the drive information from Samsung Magician: